Represents the various scheduling strategies for parallel for loops. Detailed explanations of each scheduling strategy are provided alongside each getter. If no schedule is specified, the default is Schedule.Static.

Public Member Functions

| Return type | Member | Description |
| --- | --- | --- |
| abstract void | LoopInit (int start, int end, uint num_threads, uint chunk_size) | Abstract method that built-in schedulers override to implement IScheduler. |
| abstract void | LoopNext (int thread_id, out int start, out int end) | Abstract method that built-in schedulers override to implement IScheduler. |

Properties

| Type | Property | Description |
| --- | --- | --- |
| static Schedule | Static [get] | The static scheduling strategy. Iterations are split into chunks of the given chunk size, and the chunks are assigned to threads in round-robin order. If no chunk size is specified, it is computed as the total number of iterations divided by the number of threads. |
| static Schedule | Dynamic [get] | The dynamic scheduling strategy. Iterations are held in a central queue, and a thread fetches a chunk of iterations from the queue whenever it has no assigned work. If no chunk size is specified, a basic heuristic determines one. |
| static Schedule | Guided [get] | The guided scheduling strategy. Similar to dynamic, but the chunk size starts large and shrinks as iterations are consumed. Each chunk's size is based on the number of remaining iterations divided by the number of threads. The chunk size parameter sets the minimum chunk size. |
| static Schedule | Runtime [get] | Runtime-defined scheduling strategy. The schedule is determined by the 'OMP_SCHEDULE' environment variable. Expected format: "schedule[,chunk_size]", e.g., "static,128", "guided", or "dynamic,3". |
| static Schedule | WorkStealing [get] | The work-stealing scheduling strategy. Each thread gets its own local queue of iterations to execute. If a thread's queue is empty, it randomly selects another thread's queue as its "victim" and steals half of that queue's remaining iterations. The chunk size parameter specifies how many iterations a thread executes from its local queue at a time. |

Static Private Attributes | |

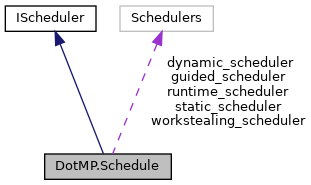

| static Schedulers.StaticScheduler | static_scheduler = new Schedulers.StaticScheduler() |

| Internal holder for StaticScheduler object. More... | |

| static Schedulers.DynamicScheduler | dynamic_scheduler = new Schedulers.DynamicScheduler() |

| Internal holder for the DynamicScheduler object. More... | |

| static Schedulers.GuidedScheduler | guided_scheduler = new Schedulers.GuidedScheduler() |

| Internal holder for the GuidedScheduler object. More... | |

| static Schedulers.RuntimeScheduler | runtime_scheduler = new Schedulers.RuntimeScheduler() |

| Internal holder for the RuntimeScheduler object. More... | |

| static Schedulers.WorkStealingScheduler | workstealing_scheduler = new Schedulers.WorkStealingScheduler() |

| Internal holder for the WorkStealingScheduler object. More... | |

Detailed Description

Represents the various scheduling strategies for parallel for loops. Detailed explanations of each scheduling strategy are provided alongside each getter. If no schedule is specified, the default is Schedule.Static.

Member Function Documentation

◆ LoopInit()

abstract void LoopInit (int start, int end, uint num_threads, uint chunk_size) [pure virtual]

Abstract method that built-in schedulers override to implement IScheduler.

Parameters

- start: The start of the loop, inclusive.

- end: The end of the loop, exclusive.

- num_threads: The number of threads.

- chunk_size: The chunk size.

Implements DotMP.IScheduler.

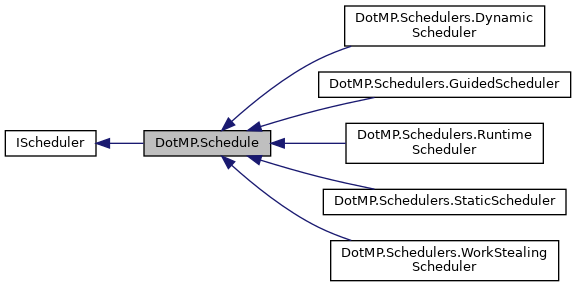

Implemented in DotMP.Schedulers.WorkStealingScheduler, DotMP.Schedulers.RuntimeScheduler, DotMP.Schedulers.GuidedScheduler, DotMP.Schedulers.DynamicScheduler, and DotMP.Schedulers.StaticScheduler.

◆ LoopNext()

abstract void LoopNext (int thread_id, out int start, out int end) [pure virtual]

Abstract method that built-in schedulers override to implement IScheduler.

Parameters

- thread_id: The thread ID.

- start: The start of the chunk, inclusive.

- end: The end of the chunk, exclusive.

Implements DotMP.IScheduler.

Implemented in DotMP.Schedulers.WorkStealingScheduler, DotMP.Schedulers.RuntimeScheduler, DotMP.Schedulers.GuidedScheduler, DotMP.Schedulers.DynamicScheduler, and DotMP.Schedulers.StaticScheduler.
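
The two methods suggest a simple protocol: initialize once per loop, then let each thread repeatedly ask for its next chunk. The following is a minimal sketch of that protocol, not DotMP's implementation. `IChunkScheduler`, `OneBlockScheduler`, and `LoopDriver` are hypothetical names introduced here; only the two method signatures come from this page. The sketch also assumes that an empty range (start >= end) signals that a thread has no more work, which this page does not state.

```csharp
using System;
using System.Threading;

// Hypothetical stand-in that mirrors the two signatures documented above.
public interface IChunkScheduler
{
    void LoopInit(int start, int end, uint num_threads, uint chunk_size);
    void LoopNext(int thread_id, out int start, out int end);
}

// Toy scheduler: each thread receives one contiguous block, exactly once.
// chunk_size is accepted but ignored to keep the sketch short.
public sealed class OneBlockScheduler : IChunkScheduler
{
    private int begin, finish;
    private uint threads;
    private bool[] served;

    public void LoopInit(int start, int end, uint num_threads, uint chunk_size)
    {
        begin = start;
        finish = end;
        threads = num_threads;
        served = new bool[num_threads];
    }

    public void LoopNext(int thread_id, out int start, out int end)
    {
        if (served[thread_id])
        {
            // Assumption: an empty range means "no more work for this thread".
            start = end = finish;
            return;
        }
        served[thread_id] = true;
        long total = finish - begin;
        start = begin + (int)(total * thread_id / threads);
        end = begin + (int)(total * (thread_id + 1) / threads);
    }
}

public static class LoopDriver
{
    // Runs the loop and returns how many times each iteration was executed.
    public static int[] Run(IChunkScheduler scheduler, int start, int end,
                            uint numThreads, uint chunkSize)
    {
        scheduler.LoopInit(start, end, numThreads, chunkSize);
        var hits = new int[end - start];
        var workers = new Thread[numThreads];
        for (int t = 0; t < numThreads; t++)
        {
            int id = t; // stable copy for the closure
            workers[t] = new Thread(() =>
            {
                while (true)
                {
                    scheduler.LoopNext(id, out int s, out int e);
                    if (s >= e) break; // assumed termination signal
                    for (int i = s; i < e; i++)
                        Interlocked.Increment(ref hits[i - start]);
                }
            });
            workers[t].Start();
        }
        foreach (var w in workers) w.Join();
        return hits;
    }
}
```

Calling `LoopDriver.Run(new OneBlockScheduler(), 0, 10, 3, 1)` should return an array in which every iteration was executed exactly once.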

Member Data Documentation

◆ dynamic_scheduler

Schedulers.DynamicScheduler dynamic_scheduler = new Schedulers.DynamicScheduler() [static, private]

Internal holder for the DynamicScheduler object.

◆ guided_scheduler

Schedulers.GuidedScheduler guided_scheduler = new Schedulers.GuidedScheduler() [static, private]

Internal holder for the GuidedScheduler object.

◆ runtime_scheduler

Schedulers.RuntimeScheduler runtime_scheduler = new Schedulers.RuntimeScheduler() [static, private]

Internal holder for the RuntimeScheduler object.

◆ static_scheduler

Schedulers.StaticScheduler static_scheduler = new Schedulers.StaticScheduler() [static, private]

Internal holder for the StaticScheduler object.

◆ workstealing_scheduler

Schedulers.WorkStealingScheduler workstealing_scheduler = new Schedulers.WorkStealingScheduler() [static, private]

Internal holder for the WorkStealingScheduler object.

Property Documentation

◆ Dynamic

Schedule Dynamic [static, get]

The dynamic scheduling strategy. Iterations are held in a central queue, and a thread fetches a chunk of iterations from the queue whenever it has no assigned work. If no chunk size is specified, a basic heuristic determines one.

Pros:

- Better load balancing than static scheduling when iteration costs vary.

Cons:

- Increased overhead, because every chunk fetch goes through the shared queue.
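
The central queue can be pictured as a shared cursor that threads advance atomically. The sketch below illustrates the idea under that assumption and is not DotMP's implementation. `DynamicQueueSketch` and its members are hypothetical names, and the chunk size is passed explicitly because the default heuristic is not described on this page.

```csharp
using System;
using System.Threading;

public sealed class DynamicQueueSketch
{
    private readonly int end;
    private readonly int chunk;
    private int next; // shared cursor: first iteration not yet handed out

    public DynamicQueueSketch(int start, int end, int chunkSize)
    {
        this.next = start;
        this.end = end;
        this.chunk = chunkSize;
    }

    // Atomically claims the next chunk. Returns false once the loop is exhausted.
    // Note: the cursor could overflow if 'end' is close to int.MaxValue;
    // the sketch ignores that case.
    public bool TryNext(out int chunkStart, out int chunkEnd)
    {
        // Interlocked.Add returns the value after the addition.
        int claimedEnd = Interlocked.Add(ref next, chunk);
        chunkStart = claimedEnd - chunk;
        chunkEnd = Math.Min(claimedEnd, end);
        return chunkStart < end;
    }

    // Drains the queue with several threads and returns the number of
    // iterations that were handed out in total.
    public static long RunAll(int start, int end, int chunkSize, int numThreads)
    {
        var queue = new DynamicQueueSketch(start, end, chunkSize);
        long executed = 0;
        var threads = new Thread[numThreads];
        for (int t = 0; t < numThreads; t++)
        {
            threads[t] = new Thread(() =>
            {
                while (queue.TryNext(out int s, out int e))
                    Interlocked.Add(ref executed, e - s);
            });
            threads[t].Start();
        }
        foreach (var th in threads) th.Join();
        return executed;
    }
}
```

Every chunk fetch touches the shared cursor, which is where the extra overhead listed under Cons comes from; a larger chunk size reduces the number of fetches at the cost of coarser load balancing.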

◆ Guided

Schedule Guided [static, get]

The guided scheduling strategy. Similar to dynamic, but the chunk size starts large and shrinks as iterations are consumed. Each chunk's size is based on the number of remaining iterations divided by the number of threads. The chunk size parameter sets the minimum chunk size.

Pros:

- Adapts to uneven workloads with fewer chunk fetches than dynamic scheduling.

Cons:

- May handle loops poorly when the expensive iterations come early, because the earliest chunks are the largest.
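
To make the shrinking behavior concrete, the sketch below lists the chunks a guided schedule would hand out if each chunk were exactly max(minimum chunk size, remaining / threads). That exact formula and its rounding are assumptions; this page only says the size is "based on" that quotient. `GuidedSketch.Chunks` is a hypothetical helper, not part of DotMP.

```csharp
using System;
using System.Collections.Generic;

public static class GuidedSketch
{
    // Returns the [start, end) chunks in the order they would be handed out.
    // minChunk must be at least 1.
    public static List<(int Start, int End)> Chunks(int start, int end,
                                                     int numThreads, int minChunk)
    {
        var chunks = new List<(int Start, int End)>();
        int next = start;
        while (next < end)
        {
            int remaining = end - next;
            // Assumed formula: remaining / threads, but never below the minimum.
            int size = Math.Max(minChunk, remaining / numThreads);
            // Never run past the end of the loop.
            size = Math.Min(size, remaining);
            chunks.Add((next, next + size));
            next += size;
        }
        return chunks;
    }
}
```

For 100 iterations, 4 threads, and a minimum chunk size of 5, `GuidedSketch.Chunks(0, 100, 4, 5)` yields chunk sizes 25, 18, 14, 10, 8, 6, 5, 5, 5, 4: large chunks first, then the minimum size, with the final chunk clamped to what is left.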

◆ Runtime

Schedule Runtime [static, get]

Runtime-defined scheduling strategy. Schedule is determined by the 'OMP_SCHEDULE' environment variable. Expected format: "schedule[,chunk_size]", e.g., "static,128", "guided", or "dynamic,3".
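
The "schedule[,chunk_size]" format can be parsed as in the sketch below. This is an illustration of the format only, not DotMP's parser. `RuntimeScheduleSketch` is a hypothetical name, and two behaviors are assumptions: falling back to "static" when the variable is unset or empty, and ignoring a chunk size that is not a positive integer.

```csharp
using System;

public static class RuntimeScheduleSketch
{
    // Splits "schedule[,chunk_size]" into a lower-cased schedule name and an
    // optional chunk size.
    public static (string Kind, uint? ChunkSize) Parse(string value)
    {
        // Assumed fallback when nothing is specified.
        if (string.IsNullOrWhiteSpace(value))
            return ("static", null);

        string[] parts = value.Split(',');
        string kind = parts[0].Trim().ToLowerInvariant();

        uint? chunk = null;
        if (parts.Length > 1
            && uint.TryParse(parts[1].Trim(), out uint parsed)
            && parsed > 0)
        {
            chunk = parsed;
        }
        return (kind, chunk);
    }

    // Reads the OMP_SCHEDULE environment variable named on this page.
    public static (string Kind, uint? ChunkSize) FromEnvironment()
    {
        return Parse(Environment.GetEnvironmentVariable("OMP_SCHEDULE"));
    }
}
```

With this sketch, "static,128" parses to the schedule "static" with chunk size 128, and "guided" parses to "guided" with no chunk size.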

◆ Static

Schedule Static [static, get]

The static scheduling strategy. Iterations are split into chunks of the given chunk size, and the chunks are assigned to threads in round-robin order. If no chunk size is specified, it is computed as the total number of iterations divided by the number of threads.

Pros:

- Reduced overhead, because the assignment needs no coordination between threads while the loop runs.

Cons:

- Potential for load imbalance when iteration costs vary.

Note: This is the default strategy if no schedule is specified.
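
The round-robin assignment described above can be sketched as follows. This is an illustration, not DotMP's implementation. `StaticScheduleSketch.Partition` is a hypothetical helper, and rounding the default chunk size up (so that no iterations are left over) is an assumption, since this page does not say how the division is rounded.

```csharp
using System;
using System.Collections.Generic;

public static class StaticScheduleSketch
{
    // Returns, for each thread, the list of [start, end) chunks it would run.
    public static List<(int Start, int End)>[] Partition(
        int start, int end, int numThreads, int? chunkSize = null)
    {
        int total = end - start;
        // Assumed default: total / threads, rounded up and at least 1.
        int chunk = chunkSize ?? Math.Max(1, (total + numThreads - 1) / numThreads);

        var plan = new List<(int Start, int End)>[numThreads];
        for (int t = 0; t < numThreads; t++)
            plan[t] = new List<(int Start, int End)>();

        // Chunk k goes to thread k % numThreads (round-robin).
        int chunkIndex = 0;
        for (int s = start; s < end; s += chunk, chunkIndex++)
            plan[chunkIndex % numThreads].Add((s, Math.Min(s + chunk, end)));

        return plan;
    }
}
```

For example, `StaticScheduleSketch.Partition(0, 10, 3, 2)` gives thread 0 the chunks [0, 2) and [6, 8), thread 1 the chunks [2, 4) and [8, 10), and thread 2 the chunk [4, 6). The whole plan is fixed before the loop starts, which is why the overhead is low and why uneven iteration costs cannot be corrected later.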

◆ WorkStealing

Schedule WorkStealing [static, get]

The work-stealing scheduling strategy. Each thread gets its own local queue of iterations to execute. If a thread's queue is empty, it randomly selects another thread's queue as its "victim" and steals half of that queue's remaining iterations. The chunk size parameter specifies how many iterations a thread executes from its local queue at a time.

Pros:

- Good approximation of optimal load balancing.

- No contention over a shared queue.

Cons:

- Stealing can be an expensive operation.
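
The sketch below illustrates the local-queue and steal-half behavior described above; it is not DotMP's implementation. `WorkStealingSketch` is a hypothetical class. It assumes that each local queue starts as a contiguous, near-equal block of the loop, that a thief takes the upper half of the victim's range, and that a thread gives up once a scan starting at a random victim finds no work. None of these details are stated on this page.

```csharp
using System;
using System.Threading;

public sealed class WorkStealingSketch
{
    private readonly int[] next;     // per-thread cursor into its local range
    private readonly int[] limit;    // per-thread exclusive end of its local range
    private readonly object[] locks; // one lock per local range
    private readonly Random[] rngs;  // one generator per thread (Random is not thread-safe)
    private readonly int chunk;

    public WorkStealingSketch(int start, int end, int numThreads, int chunkSize)
    {
        next = new int[numThreads];
        limit = new int[numThreads];
        locks = new object[numThreads];
        rngs = new Random[numThreads];
        chunk = chunkSize;
        long total = end - start;
        for (int t = 0; t < numThreads; t++)
        {
            // Assumed initial split: contiguous, near-equal blocks.
            next[t] = start + (int)(total * t / numThreads);
            limit[t] = start + (int)(total * (t + 1) / numThreads);
            locks[t] = new object();
            rngs[t] = new Random(t);
        }
    }

    // Gets the next chunk for a thread, stealing when its local range is empty.
    public bool TryNext(int threadId, out int chunkStart, out int chunkEnd)
    {
        while (true)
        {
            if (TakeLocal(threadId, out chunkStart, out chunkEnd)) return true;
            if (!TrySteal(threadId)) return false;
        }
    }

    private bool TakeLocal(int threadId, out int chunkStart, out int chunkEnd)
    {
        lock (locks[threadId])
        {
            chunkStart = next[threadId];
            chunkEnd = Math.Min(chunkStart + chunk, limit[threadId]);
            next[threadId] = chunkEnd;
            return chunkStart < chunkEnd;
        }
    }

    private bool TrySteal(int thief)
    {
        int n = next.Length;
        int first = rngs[thief].Next(n); // random first victim
        for (int k = 0; k < n; k++)
        {
            int victim = (first + k) % n;
            if (victim == thief) continue;

            int stolenStart = 0, stolenEnd = 0;
            lock (locks[victim])
            {
                int remaining = limit[victim] - next[victim];
                if (remaining <= 0) continue;
                int half = (remaining + 1) / 2; // at least one iteration
                stolenEnd = limit[victim];
                stolenStart = stolenEnd - half;
                limit[victim] = stolenStart;
            }
            // Locks are never nested, so two thieves cannot deadlock.
            lock (locks[thief])
            {
                next[thief] = stolenStart;
                limit[thief] = stolenEnd;
            }
            return true;
        }
        return false;
    }

    // Runs the loop and reports whether every iteration ran exactly once.
    public static bool RunAll(int start, int end, int numThreads, int chunkSize)
    {
        var sched = new WorkStealingSketch(start, end, numThreads, chunkSize);
        var hits = new int[end - start];
        var threads = new Thread[numThreads];
        for (int t = 0; t < numThreads; t++)
        {
            int id = t; // stable copy for the closure
            threads[t] = new Thread(() =>
            {
                while (sched.TryNext(id, out int s, out int e))
                    for (int i = s; i < e; i++)
                        Interlocked.Increment(ref hits[i - start]);
            });
            threads[t].Start();
        }
        foreach (var th in threads) th.Join();
        return Array.TrueForAll(hits, h => h == 1);
    }
}
```

In the common case a thread only touches its own range, which is the "no contention over a shared queue" benefit. The steal path takes the victim's lock and then the thief's own, which is one reason stealing is comparatively expensive.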

The documentation for this class was generated from the following file:

- DotMP/Schedule.cs